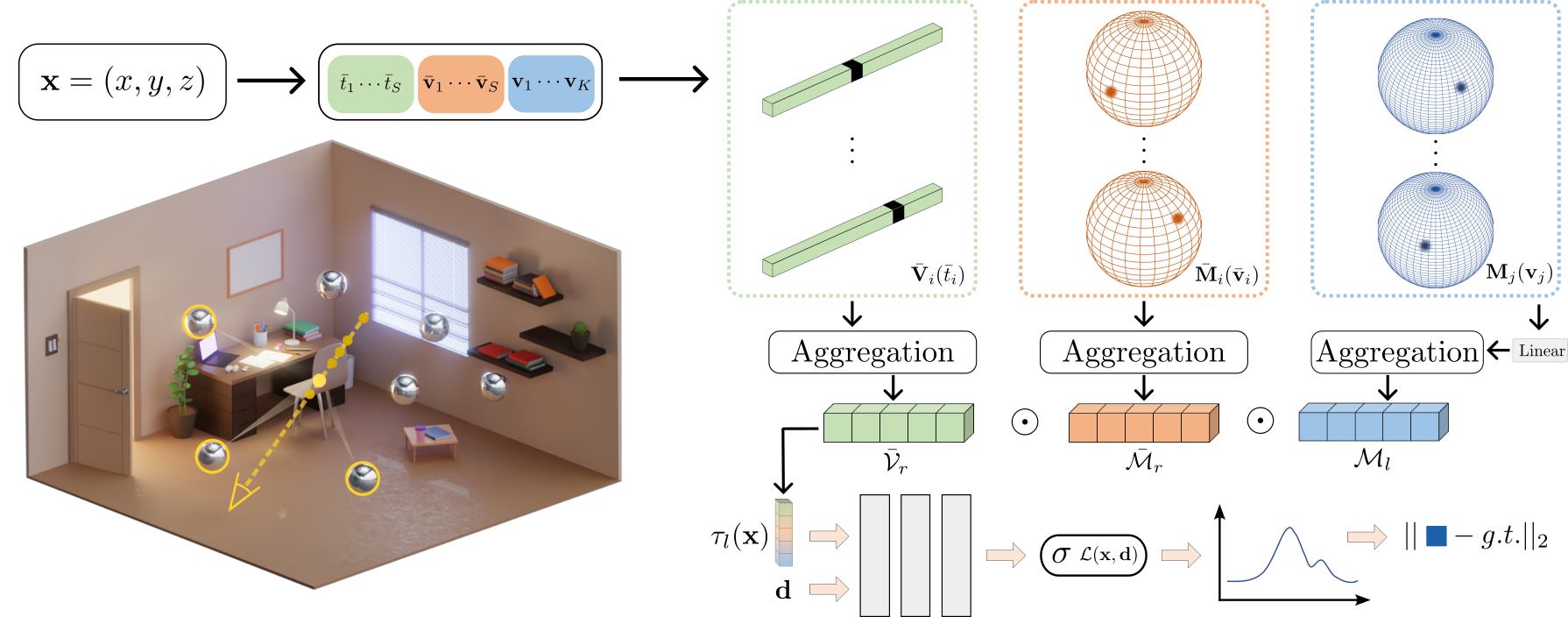

We present NeLF-Pro, a novel representation for modeling and reconstructing light fields in diverse natural scenes that vary in extent and spatial granularity. In contrast to previous fast reconstruction methods that represent the 3D scene globally, we model the light field of a scene as a set of local light field feature probes, parameterized with position and multi-channel 2D feature maps. Our central idea is to bake the scene's light field into spatially varying learnable representations and to query point features by weighted blending of probes close to the camera, which allows for mipmap representation and rendering. We introduce a novel vector-matrix-matrix (VMM) factorization technique that effectively represents the light field feature probes as products of core factors (i.e., VM) shared among local feature probes, and a basis factor (i.e., M), efficiently encoding internal relationships and patterns within the scene. Experimentally, we demonstrate that NeLF-Pro significantly boosts the performance of feature grid-based representations, and achieves fast reconstruction with better rendering quality while maintaining compact modeling.
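To make the "weighted blending of probes close to the camera" idea concrete, the following is a minimal sketch, not the paper's implementation. It assumes that the `k` probes nearest to the camera are selected and blended with normalized inverse-distance weights; the weighting scheme, the function names `nearest_probe_weights` and `blend_features`, and the toy data are all hypothetical. Each entry of `feats` stands in for a feature vector already queried from one probe for a single sample point.

```python
import math

def nearest_probe_weights(camera_pos, probe_positions, k=2, eps=1e-8):
    """Pick the k probes closest to the camera and return (indices, weights).

    Weights are inverse-distance and normalized to sum to 1.
    This weighting is an illustrative assumption, not the paper's scheme.
    """
    dists = [math.dist(camera_pos, p) for p in probe_positions]
    order = sorted(range(len(probe_positions)), key=lambda i: dists[i])[:k]
    raw = [1.0 / (dists[i] + eps) for i in order]
    total = sum(raw)
    return order, [w / total for w in raw]

def blend_features(features, indices, weights):
    """Weighted sum of the selected per-probe feature vectors."""
    dim = len(features[indices[0]])
    return [
        sum(w * features[i][c] for i, w in zip(indices, weights))
        for c in range(dim)
    ]

# Toy data: three probes on the x-axis, each with a 2-channel feature.
probes = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
feats = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]

idx, w = nearest_probe_weights((1.0, 0.0, 0.0), probes, k=2)
blended = blend_features(feats, idx, w)
```

Because only probes near the camera contribute, the distant third probe has no influence on `blended` in this toy setup.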

Our approach models a scene using light field probes represented by a set of core vectors, a set of core matrices, and a set of basis matrices. We project the sample points onto local probes to obtain local spherical coordinates and then query factors from probes near the camera. These factors are then aggregated using a self-attention mechanism and combined using Hadamard products. The resulting aggregated feature vector and the viewing direction are used to calculate the volume density and the light field radiance.
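Two steps of the pipeline above, projecting a sample point into a probe's local spherical coordinates and combining queried factors with a Hadamard product, can be sketched as follows. This is an illustrative sketch under stated assumptions: the angle convention (polar angle measured from the z-axis), the helper names `local_spherical` and `hadamard`, and the three toy factor vectors are hypothetical, and the factor lookup and the aggregation step are omitted entirely.

```python
import math

def local_spherical(point, probe_center):
    """Return (theta, phi, r) of `point` relative to `probe_center`.

    theta: polar angle from the +z axis, in [0, pi]
    phi:   azimuth in the xy-plane, as returned by atan2
    r:     distance from the probe center
    The convention is an assumption made for this sketch.
    """
    dx, dy, dz = (p - c for p, c in zip(point, probe_center))
    r = math.sqrt(dx * dx + dy * dy + dz * dz)
    if r == 0.0:
        return 0.0, 0.0, 0.0
    theta = math.acos(max(-1.0, min(1.0, dz / r)))
    phi = math.atan2(dy, dx)
    return theta, phi, r

def hadamard(*vectors):
    """Element-wise (Hadamard) product of equally sized vectors."""
    out = [1.0] * len(vectors[0])
    for v in vectors:
        out = [a * b for a, b in zip(out, v)]
    return out

# A point on the +x axis relative to a probe at the origin.
theta, phi, r = local_spherical((1.0, 0.0, 0.0), (0.0, 0.0, 0.0))

# Hypothetical stand-ins for values queried from a core vector,
# a core matrix, and a basis matrix at the projected coordinates.
core_vec_feat = [1.0, 2.0, 3.0]
core_mat_feat = [2.0, 2.0, 2.0]
basis_mat_feat = [0.5, 1.0, 2.0]
feature = hadamard(core_vec_feat, core_mat_feat, basis_mat_feat)
```

In a full pipeline, `feature` together with the viewing direction would be passed to a decoder that predicts volume density and radiance; that part is not shown here.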

@inproceedings{You2024CVPR,

author = {Zinuo You and Andreas Geiger and Anpei Chen},

title = {NeLF-Pro: Neural Light Field Probes for Multi-Scale Novel View Synthesis},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2024}

}